Executive summary

- By the beginning of 2021, the Qrator Labs filtering network had expanded to 14 scrubbing centers and a total of 3 Tbps of filtering bandwidth capacity, with the São Paulo scrubbing facility becoming fully operational in early 2021;

- New partner services were fully integrated into the Qrator Labs infrastructure and customer dashboard throughout 2020: SolidWall WAF and RuGeeks CDN;

- Upgraded filtering logic allows Qrator Labs to serve even bigger infrastructures with full-scale cybersecurity protection and DDoS attack mitigation;

- The newest AMD processors are now widely used by Qrator Labs in packet processing.

DDoS attacks were on the rise during 2020, with the most relentless attacks described as short and overwhelmingly intensive.

BGP incidents, however, were an area where it was evident that change was, and still is, needed: there was a significant number of devastating hijacks and route leaks.

In 2020, we began providing our services in Singapore under a new partnership and opened a new scrubbing center in Dubai, where our fully functioning branch is staffed by the best professionals to serve local customers.

Entering the second decade of Qrator Labs

As 2021 began, Qrator Labs celebrated its official ten-year anniversary. Veteran colleagues found it hard to believe that so much time had already passed since we first opened.

Quick facts about what the company has achieved in 10 years:

- From 1 to 14 scrubbing centers on 4 continents, with locations including Japan, Singapore, and the Arabian Peninsula;

- Entering 2021, the Qrator filtering network has approximately 3 Tbps of cumulative bandwidth dedicated to DDoS mitigation;

- From 7 to almost 70 employees in eleven cities;

- And we have saved, literally, thousands of customers.

… And all that with no third-party investment! Our company has managed to follow a strictly self-sufficient growth and development path, thanks to the enormous effort of everyone involved throughout all these years.

Yet, we still do our best to preserve our core values: open-mindedness, professionalism, adherence to the scientific approach and customer focus. Although we always have unmet commercial objectives, the team remains most passionate about pursuing new technical solutions. With limited resources, we strive to remain focused on our primary research areas, which actually have not changed: the world of BGP routing and DDoS-attack mitigation.

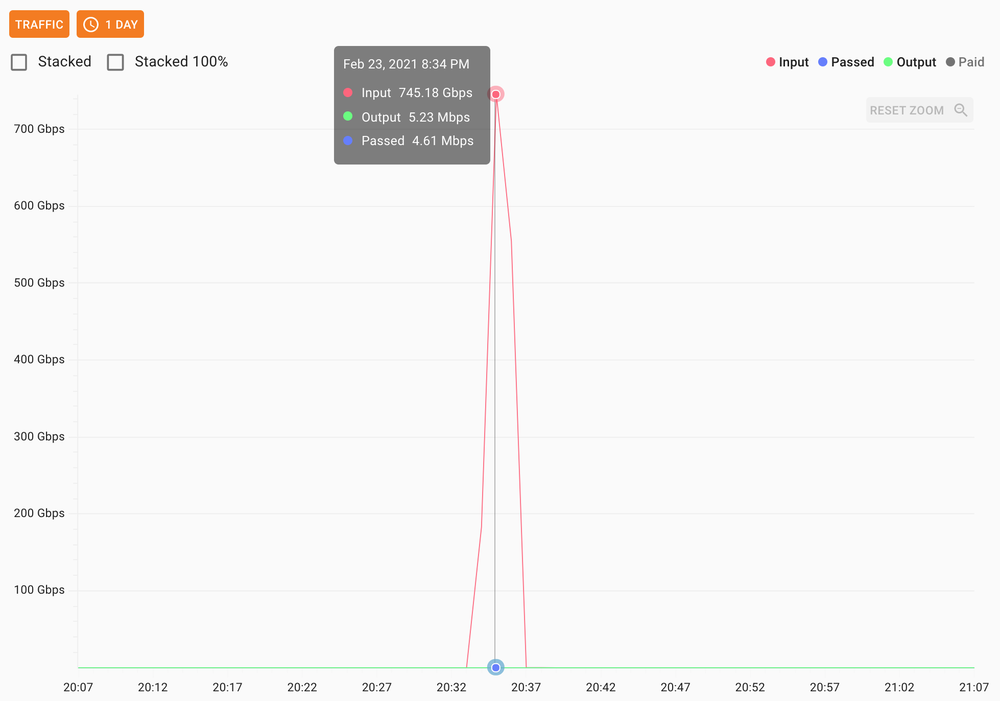

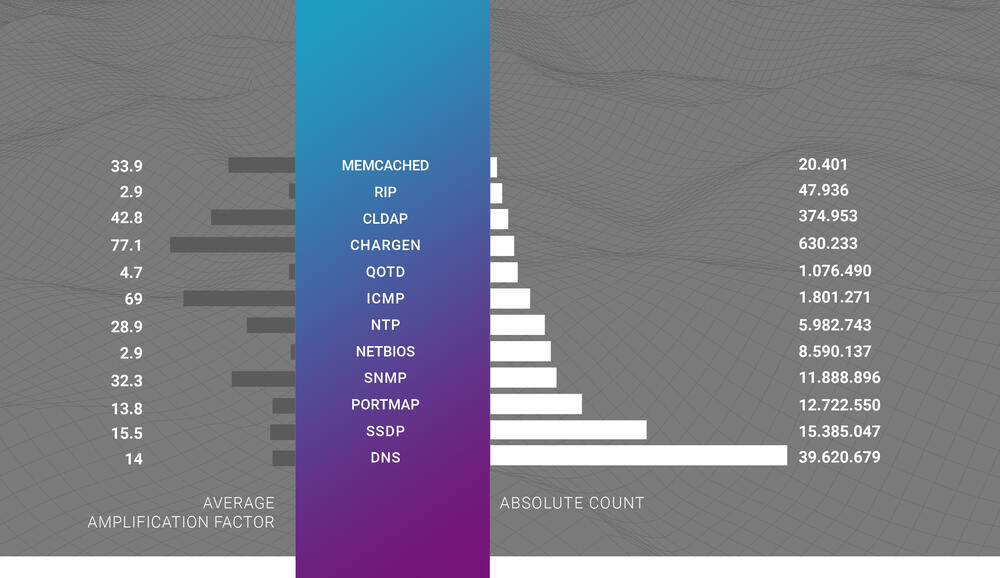

2021 started with a bang - a 750 Gbps DDoS attack consisting mostly of DNS amplification traffic. This particular attack vector is one of the oldest and best known, yet it is still capable of wreaking havoc with tremendous effectiveness.

Improvements behind filtering logic

Over the course of 2019-2020 we put a lot of effort into improving the filtering logic behind our DDoS attack mitigation service. We realized gains in the overall efficiency of 4-8x, using 25-33% less RAM than before, depending on specific traffic flow parameters. This means that we are now able to take more legitimate traffic onboard and work with the biggest customers in the world.

We significantly upgraded our attack detector, an algorithm that helps confirm an ongoing attack. Users now have a broader choice of parameters to customize, and these parameters serve as blacklisting feeds. The upgrade also enables us to mitigate attacks that are closer to L7 than to L3-L4.

Request Broker is a solution that simplifies the WAF adoption process. We improved how the Qrator filtering network interacts with various WAF solutions and makes asynchronous decisions on questionable requests.

Proxy Protocol support was integrated into the Qrator filtering network in 2020. It facilitates DDoS mitigation for applications using, for example, WebSockets. It works out of the box with HAProxy, NGINX and any solution that runs on top of TCP, which simplifies connecting the Qrator filtering network to any proxy or service that supports the Proxy Protocol.
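To illustrate what a backend receives when a proxy speaks the Proxy Protocol, here is a minimal Python sketch that parses the human-readable version 1 header defined in the HAProxy specification. It is an illustration only and not part of the Qrator code base; the names `ProxyInfo` and `parse_proxy_v1` are hypothetical, and the binary version 2 header is not handled.

```python
from typing import NamedTuple, Optional, Tuple


class ProxyInfo(NamedTuple):
    family: str      # "TCP4" or "TCP6"
    src_addr: str    # original client address
    dst_addr: str    # address the client connected to
    src_port: int
    dst_port: int


def parse_proxy_v1(data: bytes) -> Tuple[Optional[ProxyInfo], bytes]:
    """Split a PROXY protocol v1 header from the start of a TCP stream.

    Returns the parsed header (None for "PROXY UNKNOWN") and the
    remaining payload bytes that follow the header line.
    """
    if not data.startswith(b"PROXY "):
        raise ValueError("missing PROXY v1 signature")
    end = data.find(b"\r\n")
    # A v1 header line, including its CRLF, is at most 107 bytes long.
    if end == -1 or end + 2 > 107:
        raise ValueError("unterminated or oversized PROXY v1 header")
    fields = data[:end].decode("ascii").split(" ")
    rest = data[end + 2:]
    if fields[1] == "UNKNOWN":
        # The proxy could not or did not pass connection details.
        return None, rest
    if fields[1] not in ("TCP4", "TCP6") or len(fields) != 6:
        raise ValueError("malformed PROXY v1 header")
    _, family, src, dst, sport, dport = fields
    return ProxyInfo(family, src, dst, int(sport), int(dport)), rest


# Example with documentation addresses (RFC 5737):
stream = b"PROXY TCP4 192.0.2.1 198.51.100.7 56324 443\r\nGET / HTTP/1.1\r\n"
info, payload = parse_proxy_v1(stream)
print(info.src_addr, info.src_port, payload)
```

The point of the header is that the protected service still sees the real client address even though the TCP connection itself arrives from the filtering network.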

AMD inside Qrator Labs

We regard the new AMD processors as a great fit with the Qrator hardware architecture. The unique characteristic of the AMD CPUs - hierarchical core separation on a chip - is quite effective for the tasks performed by Qrator Labs. Core blocks with separate memory controllers present a particular challenge to set up correctly because we change how the OS sees the CPU cores. The new AMD processors' huge advantage lies in the fact that we can now build a single socket machine with the power of the older two-socket hardware installation. With one NIC inside a machine that's connected through a PCIe connector, two CPUs present a specific load splitting challenge, in addition to the switching latency between two CPUs. That is serious asymmetry within the system. Replacing two CPUs with one CPU solves this specific problem, leaving the need to tweak only the core (hierarchical) architecture within one CPU, which is much easier.

There's one more peculiarity with the AMD CPU. The model we use supports memory operating at a frequency of 3200 MHz. When we started testing these CPUs, we discovered that, formally, the memory controller allows 3200 MHz operation, but the in-CPU bus that connects the cores works at a lower frequency. Effectively, this means that if we are using a 3200 MHz memory clock, the bandwidth drops significantly because the in-CPU bus runs at a lower frequency. The only application that would benefit from this setting would be a purely single-threaded workload using only one memory channel.

Other than that, we are now employing AMD CPUs on all new points of presence because of their cost-efficiency.

Partner technologies integrations

In 2020 we integrated new partner services with the fabric of the Qrator filtering network.

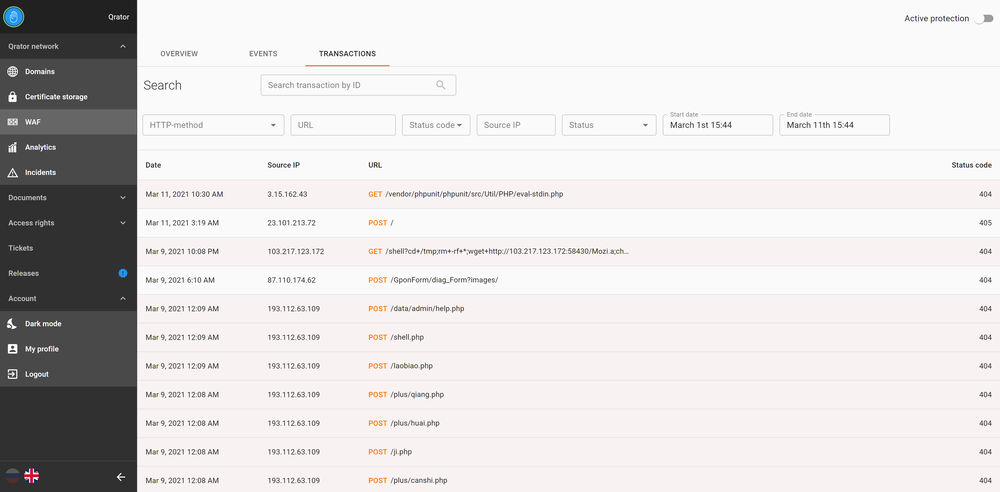

The first is the new WAF from SolidWall, which is now functional within Qrator Labs' services. SolidWall WAF is integrated into the Qrator customer dashboard, allowing customers to manage the firewall service in one place alongside other Qrator Labs services.

The second is the RuGeeks CDN service, which is now available to all Qrator Labs customers after extensive testing and development.

The customer dashboard experienced significant changes, updates and upgrades during 2020. We've invested significant effort in giving our users as many customization options as possible, so that they can manage the services for their protected resources most effectively.

Filtering in IPv6

By the end of 2020, native IPv6 adoption globally surpassed 30%, according to Google. IPv6 is now in a crucial phase of either achieving universal adoption or failing as a replacement for IPv4. We believe that IPv6 is here to stay, which is why our team has been investing time and effort into fully supporting version 6 of the Internet Protocol for the past several years.

At Qrator Labs, one of our network engineering tasks is to find the heaviest elements within the flow, i.e., the most intensive ones, which we call the "heavy hitters." First, let's explain why we solve this particular problem: as a DDoS attack mitigation company, our primary job is to deliver legitimate traffic to its owner while diverting malicious or illegitimate traffic from its intended target.

In the case of IPv4 traffic, we can solve the problem without searching for the heaviest element – it is only necessary to analyze the entire flow to the target to determine the "legitimacy" of each sender.

The situation is quite different for IPv6 traffic.

"If we need to store the full IPv6 address pool, it would take a tremendous amount of memory.

If we could store each IPv6 address using only 1 bit of memory, it would take approximately 2^128 bits for the entire address pool. This number is greater than the number of atoms on Earth.

If we could store a /64 network prefix using only 1 bit of memory, it would require 2^64 bits for the entire address pool. It's a less astronomical number than the previous one and could be compared to all the produced memory in all the devices manufactured in the world up to this moment."
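The arithmetic behind this comparison is easy to reproduce. The short Python sketch below converts the one-bit-per-entry figures into bytes and adds the IPv4 case for contrast; the helper name `bitmap_bytes` is ours and is used for illustration only.

```python
def bitmap_bytes(prefix_bits: int) -> int:
    """Bytes needed for a bitmap holding one bit per prefix of this length."""
    return 2 ** prefix_bits // 8


per_address = bitmap_bytes(128)  # one bit per IPv6 address
per_slash64 = bitmap_bytes(64)   # one bit per /64 prefix
per_ipv4 = bitmap_bytes(32)      # one bit per IPv4 address, for comparison

print(f"IPv6 addresses: {per_address:.3e} bytes")
print(f"/64 prefixes:   {per_slash64 / 2**60:.0f} EiB")
print(f"IPv4 addresses: {per_ipv4 / 2**20:.0f} MiB")
```

A bitmap over the whole IPv4 space fits in 512 MiB, which is why per-sender state is feasible there, while even the /64 case needs 2 EiB.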

Looking at IPv6, it is clear from the outset that the number of possible packet sources could be astronomical. And the same is true for their potential destinations.

Since Qrator Labs' specialty is to maintain the required level of customer service availability, this could also be viewed as maintaining the specific threshold in terms of either packet rate or bit rate received by the customer. We shouldn't pass traffic that exceeds those limits, as this could result in harmful consequences for the customer.

Taking these considerations into account, we can properly redefine the problem. It becomes clear that the primary objective is to quickly and easily (which means cheaply from the resources perspective) identify the elements within the flow that should be discarded in order to remain within the required limits. To achieve the highest quality standards, we design our system to avoid mistakenly blocking legitimate users during a DDoS attack.

We are addressing this complex issue with several tools, as no single solution suffices. We can switch between them or use all of them simultaneously. The approach involves a combination of algorithms and techniques that limit either the packet rate or the bandwidth from an individual source.

And since the size of the IPv6 address space makes exhaustive "blacklisting" or "whitelisting" of individual addresses impractical, this turns out to be a tough challenge.
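One widely used technique for limiting the packet rate from an individual source is the token bucket. The Python sketch below is a generic illustration under our own assumptions and not a description of the Qrator implementation: IPv6 senders are grouped by /64 prefix, and the names `TokenBucket`, `source_key` and `PerSourceLimiter` are hypothetical. Timestamps are passed in explicitly so that the behavior is deterministic.

```python
import ipaddress


class TokenBucket:
    """Allows `rate` packets per second with bursts of up to `burst` packets."""

    def __init__(self, rate: float, burst: float):
        self.rate = rate
        self.burst = burst
        self.tokens = burst
        self.last = None  # timestamp of the previous packet, if any

    def allow(self, now: float, cost: float = 1.0) -> bool:
        if self.last is not None:
            # Refill in proportion to the time elapsed, capped at `burst`.
            elapsed = now - self.last
            self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


def source_key(addr: str) -> str:
    """Group IPv6 senders by /64 prefix (an assumption); keep IPv4 as is."""
    ip = ipaddress.ip_address(addr)
    if ip.version == 6:
        return str(ipaddress.ip_network(f"{addr}/64", strict=False))
    return str(ip)


class PerSourceLimiter:
    def __init__(self, rate: float, burst: float):
        self.rate, self.burst = rate, burst
        # Unbounded dictionary: with IPv6 this is exactly the memory
        # problem discussed in the text, so a real system must bound it.
        self.buckets = {}

    def allow(self, addr: str, now: float) -> bool:
        key = source_key(addr)
        bucket = self.buckets.setdefault(key, TokenBucket(self.rate, self.burst))
        return bucket.allow(now)


limiter = PerSourceLimiter(rate=1.0, burst=2.0)
for src in ("2001:db8::1", "2001:db8::2", "2001:db8::3"):
    print(src, limiter.allow(src, now=0.0))
```

All three addresses share one /64, so the third packet at the same instant is rejected once the burst of two is spent.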

Looking at existing literature on this topic, we find enough research on different algorithms, such as the "search for the heavy hitters" algorithm in network traffic filtering. But limiting the packet rate and bandwidth from a source is a much broader task, which is why we speak of "threshold" and "exceeding" or "holding the threshold". And we want to maximize our QoS parameter in doing so in order to ensure that only harmful traffic is discarded.
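As an example of the heavy-hitter algorithms found in the literature, here is a minimal Python sketch of the classic Misra-Gries summary. It is shown only to make the idea concrete; the text does not say which algorithms Qrator Labs actually uses. With at most k - 1 counters, any element that occurs more than n/k times in a stream of n items is guaranteed to remain in the summary, while the stored counts may underestimate the true frequencies.

```python
def misra_gries(stream, k):
    """Return candidate heavy hitters using at most k - 1 counters."""
    counters = {}
    for item in stream:
        if item in counters:
            counters[item] += 1
        elif len(counters) < k - 1:
            counters[item] = 1
        else:
            # No free counter: decrement every counter and drop the zeros.
            for key in list(counters):
                counters[key] -= 1
                if counters[key] == 0:
                    del counters[key]
    return counters


# Hypothetical stream of source prefixes: "a" sends half of all packets.
packets = ["a"] * 50 + ["b"] * 30 + [f"x{i}" for i in range(20)]
summary = misra_gries(packets, k=3)
print(sorted(summary))
```

The memory bound is fixed by k regardless of how many distinct sources appear, which is the property that matters when the source space is as large as IPv6.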

Qrator Labs has developed a full-scale simulator to test the environment. It generates traffic flows to enable the application of various combinations of filtering algorithms and measurement of the outcome and quality. We intend to make this testing framework available publicly when it is ready.

We are building a "traffic intensity" meter. We are working towards a device that not only "counts" but also demonstrates the current intensity in whatever metrics we want. It is only possible to measure the intensity of certain types of flows, like those that are "uniform" or "static". In our case, the flow is dynamic, which is hard to define, let alone identify or measure its intensity. Counters use memory to store the value. As mentioned above, the resources used are finite, so we must choose carefully so that our counter never exceeds a particular memory limit by its design.

"With the simplest incremental counter, the main issue is that historical (previous) data affects the current observation. In case something is happening right now, you cannot separate the previous data from the current ones by just looking at the counter value."

To successfully solve this highly complex task, we first need to rank the flow elements according to their intensity. It is difficult to define this intensity mathematically due to its dynamic or changing in-stream characteristics when measured in terms of traffic to a specific server.
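One textbook way to keep old observations from dominating the current reading is an exponentially decaying counter. The Python sketch below is an illustration under our own assumptions (the class name `DecayingCounter` and the half-life parameter are hypothetical) and is only one of many possible counter designs. It uses a fixed amount of memory: a single value and a single timestamp.

```python
import math


class DecayingCounter:
    """Counter whose stored value halves every `half_life` seconds."""

    def __init__(self, half_life: float):
        self.decay = math.log(2) / half_life
        self.value = 0.0
        self.last = 0.0  # time of the most recent update

    def read(self, now: float) -> float:
        # Discount the stored value by the time elapsed since the last update.
        return self.value * math.exp(-self.decay * (now - self.last))

    def add(self, now: float, amount: float = 1.0) -> None:
        self.value = self.read(now) + amount
        self.last = now


counter = DecayingCounter(half_life=10.0)
counter.add(now=0.0, amount=100.0)   # a burst of 100 packets at t = 0
print(counter.read(now=10.0))        # about half of the burst remains
print(counter.read(now=100.0))       # the old burst has almost faded
```

A plain incremental counter would still report 100 at t = 100, whereas the decaying reading reflects that nothing has happened recently.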

After a great deal of effort, we created and are implementing what we believe to be a harmonious theory for the use of counters of different types with differences in architecture to measure "intensity." The accuracy of counters varies across different types and situations. There is no specific mathematical definition of "intensity" - this is a point worth repeating as it is crucial to understand why we do such extensive research on the topic. This is why we need to evaluate multiple counters to determine which is the best fit for the specific situation under consideration. In the end, the goal is not to build the most accurate counter - but to provide the highest quality of service in terms of DDoS attack mitigation and overall availability of the defended networking resource.

Statistics and further analysis of DDoS attack data

The median DDoS attack duration is around 5 minutes, which hasn't changed very much since we changed the methodology by which we count and separate one attack from another. The 750 Gbps DNS amplification DDoS attack that we mitigated in February 2021 lasted 4 minutes.

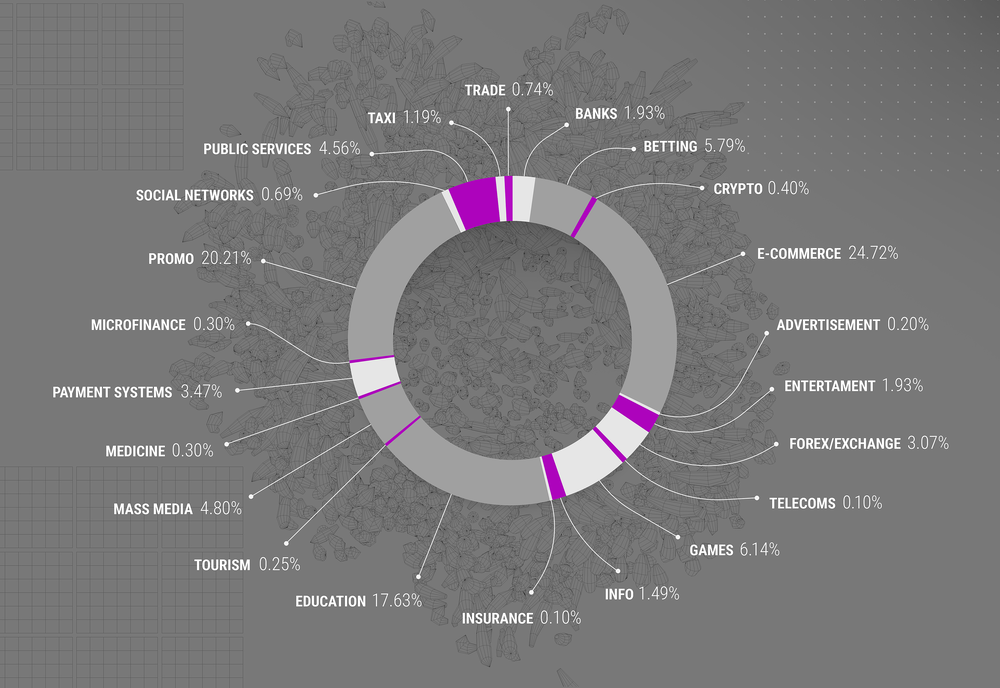

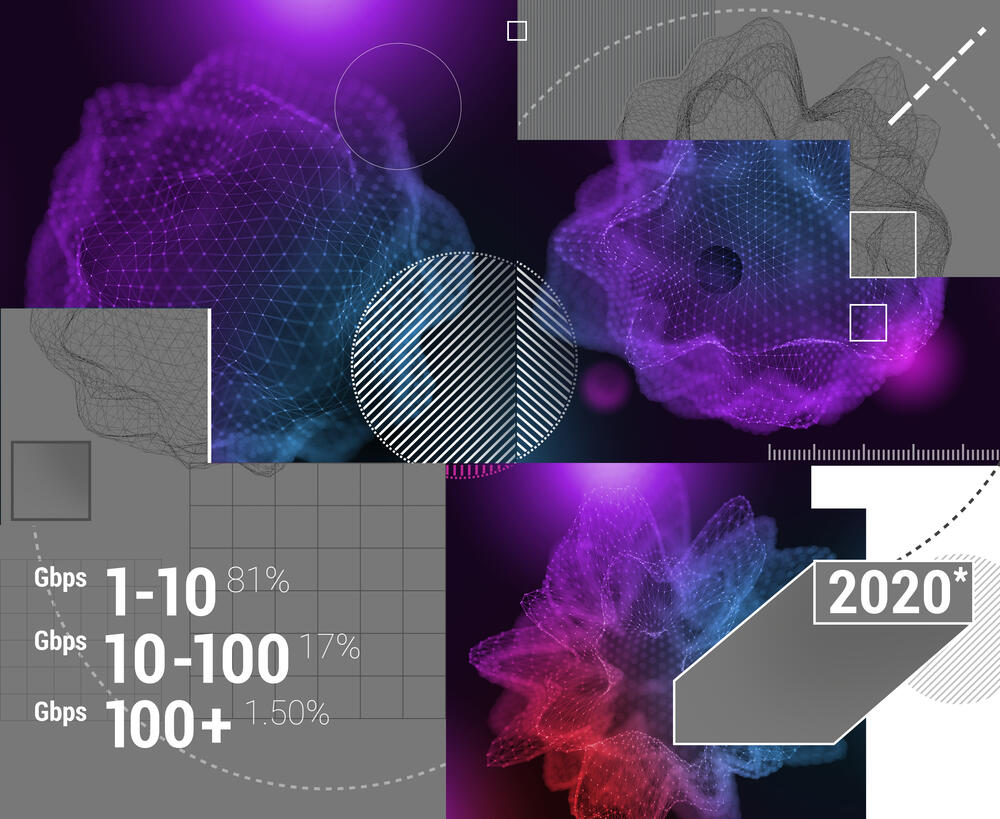

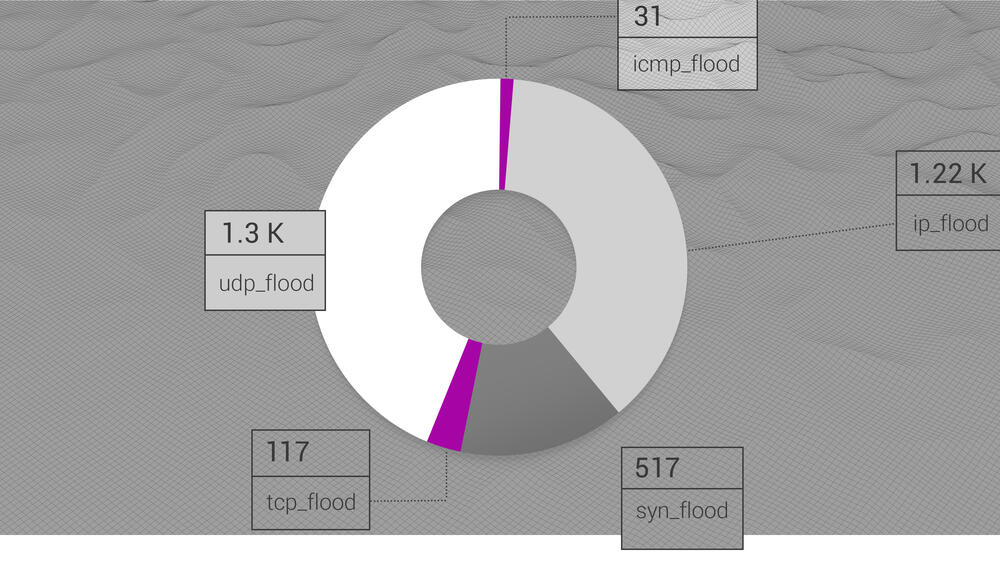

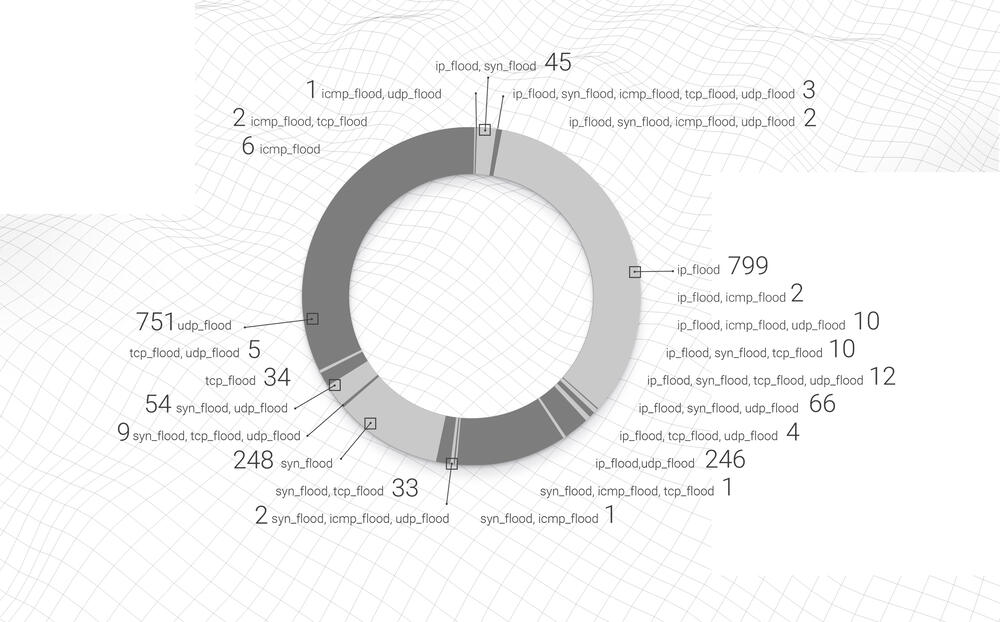

The distribution of attack vectors shows the dominance of UDP flood-based DDoS attacks in 2020, at 40.1% of all observed attacks. IP flood stands in second place with 38.15%, and SYN flood closes the top three vectors for L3 attacks with 16.23% of attacks for the year.

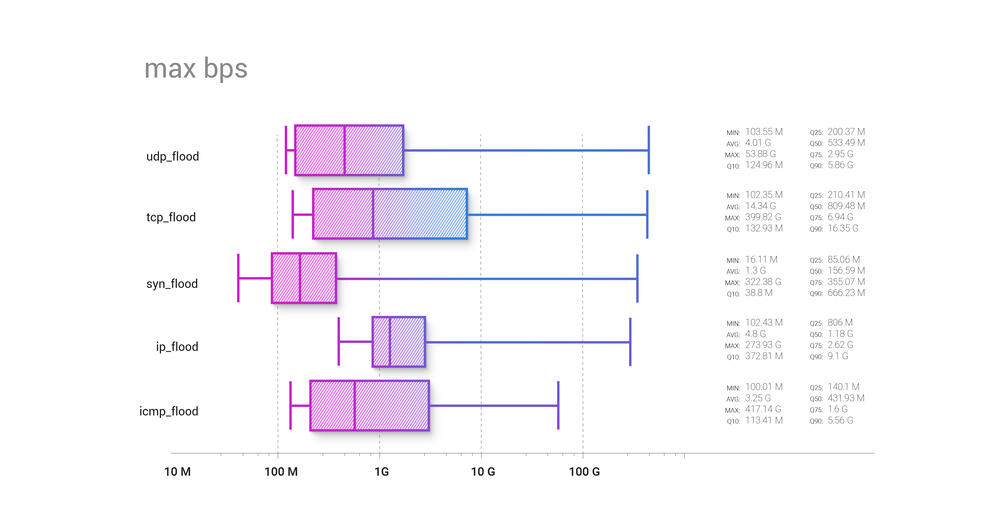

The attack vector bandwidth graph illustrates the differences between attacking vectors and shows that IP flood starts highest on the bandwidth distribution, compared to the others, with a median level above 1 Gbps.

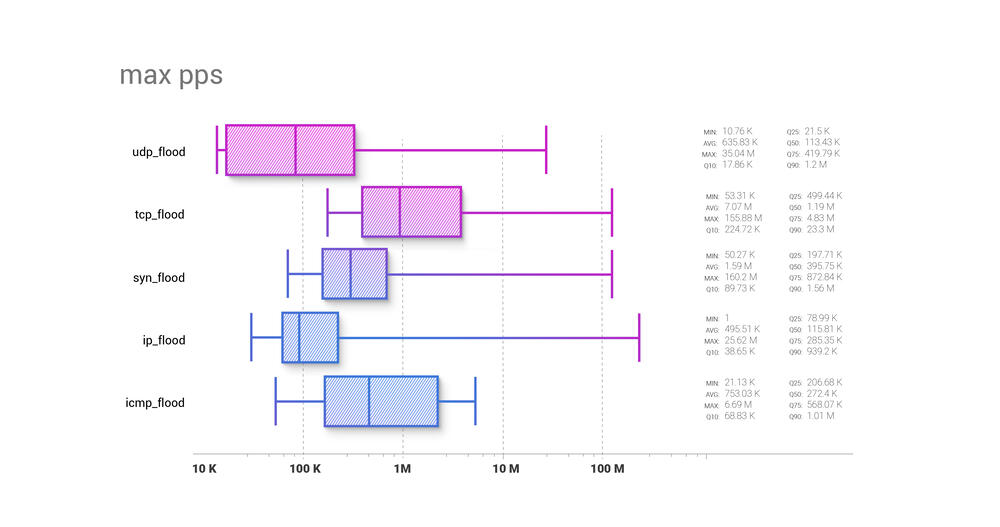

The comparison of attack vector packet rates shows that UDP flood-based DDoS attacks can start from the lowest packet rates possible, with TCP flood showing the opposite. Their median packet rates are 113.5k pps and 1.2M pps, respectively.

This is a concurrent snapshot of the vector combinations:

As you can see, even in real-world DDoS attacks the major vectors do not mix and, for the most part, stay "clean" of combinations, with pure UDP flood and IP flood combining to account for ⅔ of all the DDoS attacks in 2020.

Diving into BGP

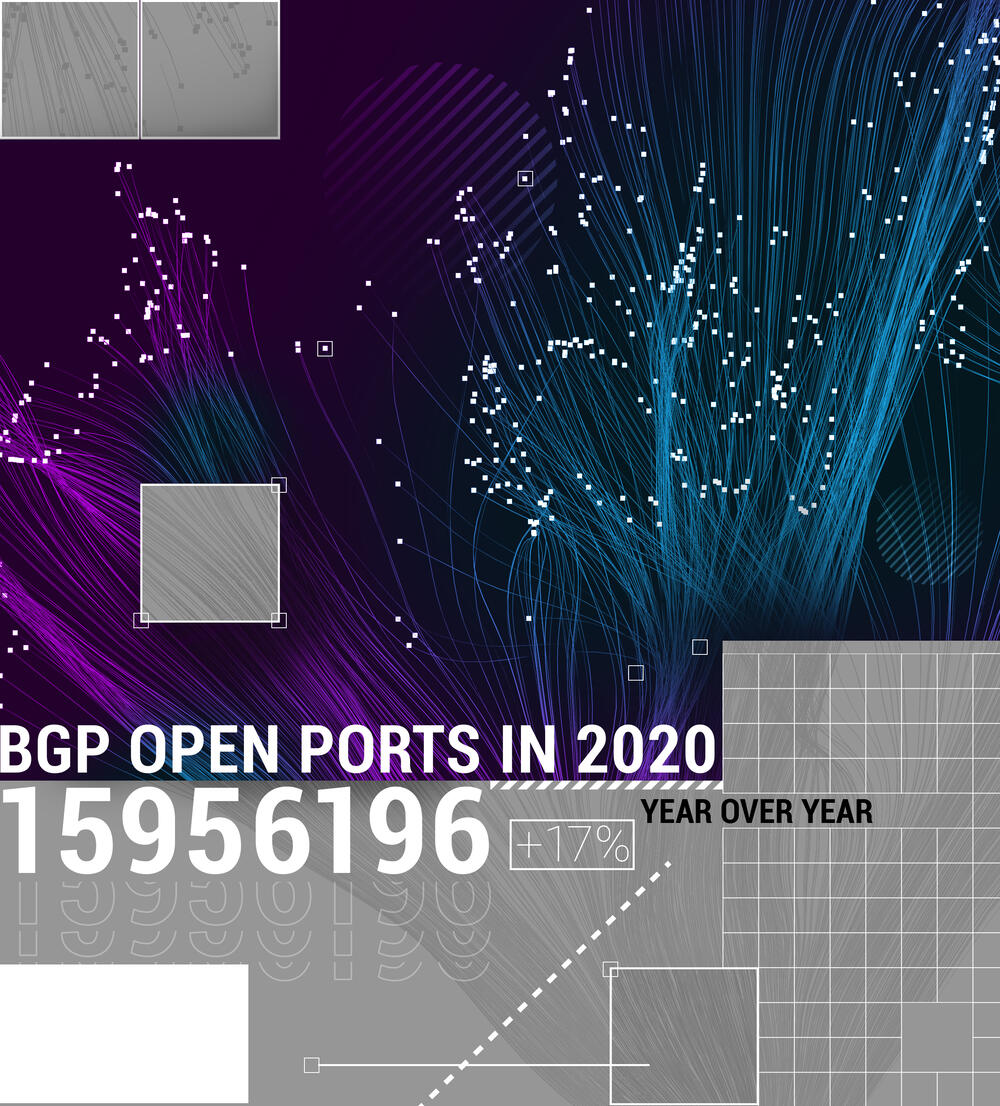

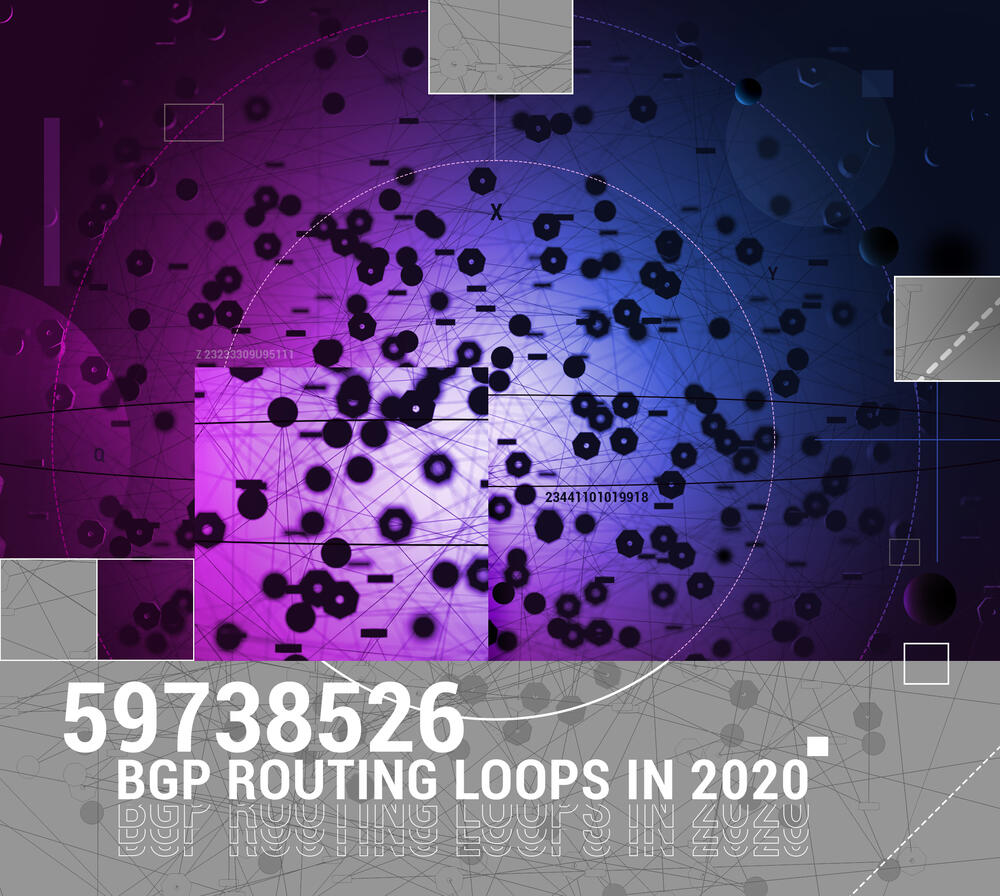

The number of open BGP ports and BGP routing loops has stayed high for the last 5 years. Apparently, this particular issue does not bother the majority of ISPs managing an autonomous system.

The situation with BGP hijacks and route leaks is different, however. In the report we published in May 2020 covering Q1 2020, we presented the following BGP route leak statistics:

January 2020: 1

February 2020: 4

March 2020: 6

April 2020: 6 +1 in IPv6

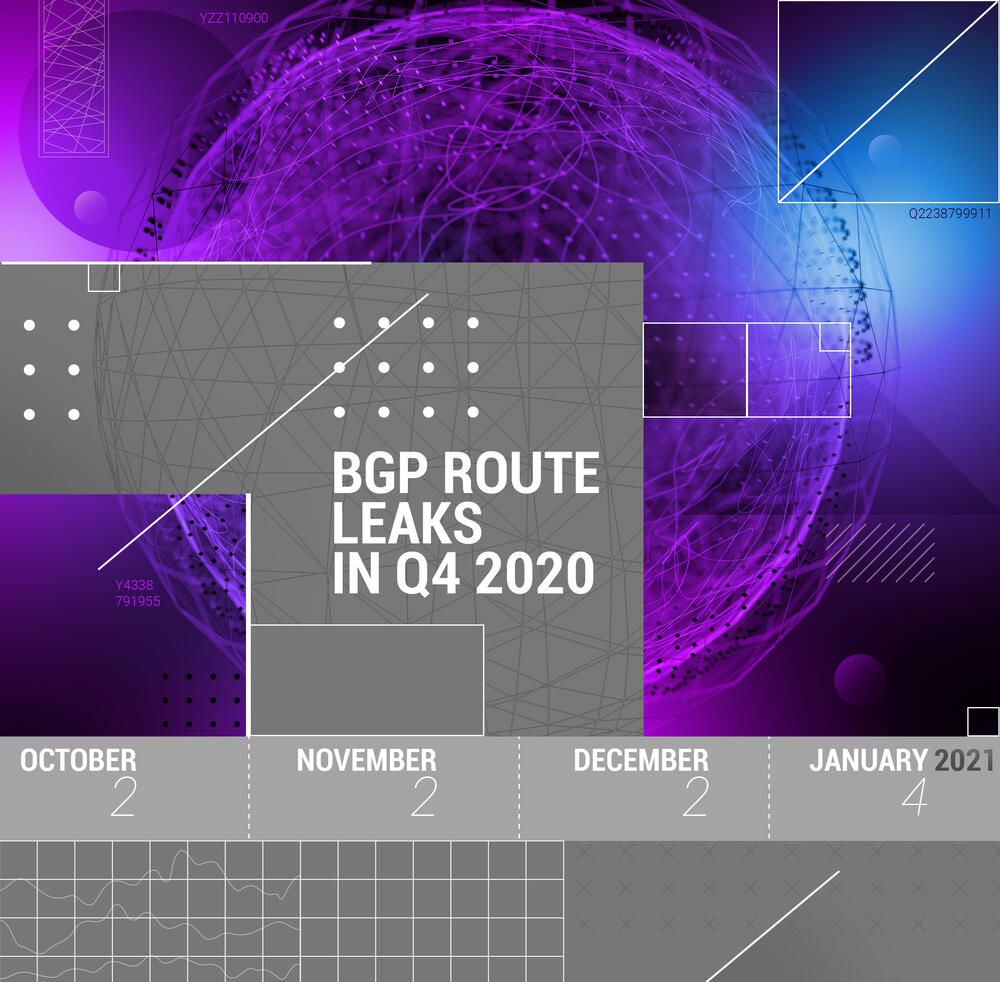

Now we can take a look at the Q4 numbers, with additional January 2021 data, for the same BGP route leaks:

Please note that we count only incidents that the Qrator.Radar team considers global, i.e., exceeding certain thresholds. Comparing the Q4 data with Q1 2020, there appears to be a slight drop in the absolute number of route leak incidents, although this holds only if we consider the incidents to be of the same size, which is rarely the case in real life. As always, larger networks are capable of affecting peers and customers more rapidly. That effectively means there is little chance of change until we have a working solution to prevent route leaks from happening. Hopefully, ASPA and all related drafts will be accepted and recognized as RFCs in the near future.
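For readers unfamiliar with what makes a path a route leak, the Python sketch below checks the well-known "valley-free" property on an AS path, given a table of business relationships between neighbors. It is a simplified illustration only: it is neither the ASPA verification procedure nor the method Qrator.Radar uses, and the relationship table, the function name `is_valley_free` and the ASNs (taken from the documentation range) are hypothetical.

```python
UP = "customer-to-provider"
PEER = "peer-to-peer"
DOWN = "provider-to-customer"


def is_valley_free(path, relation):
    """Check an AS path listed in propagation order, origin first.

    `relation[(a, b)]` describes the hop from AS a to AS b. A valid path
    goes up zero or more times, crosses at most one peer link, and then
    only goes down. Going up or sideways after that point is a leak.
    """
    past_peak = False
    for sender, receiver in zip(path, path[1:]):
        hop = relation[(sender, receiver)]
        if past_peak and hop in (UP, PEER):
            return False
        if hop in (PEER, DOWN):
            past_peak = True
    return True


relation = {
    (64496, 64497): UP,     # origin announces to its provider
    (64497, 64498): PEER,   # provider passes the route to a peer
    (64498, 64499): DOWN,   # peer passes it down to a customer
    (64497, 64500): DOWN,   # provider passes it down to a customer
    (64500, 64498): UP,     # that customer re-announces it to another provider
}

print(is_valley_free([64496, 64497, 64498, 64499], relation))  # normal path
print(is_valley_free([64496, 64497, 64500, 64498], relation))  # leaked path
```

The hard part in practice is obtaining a trustworthy relationship table; ASPA aims to provide signed customer-to-provider data that makes checks of this kind verifiable.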

Link for downloading the PDF-version of the report.