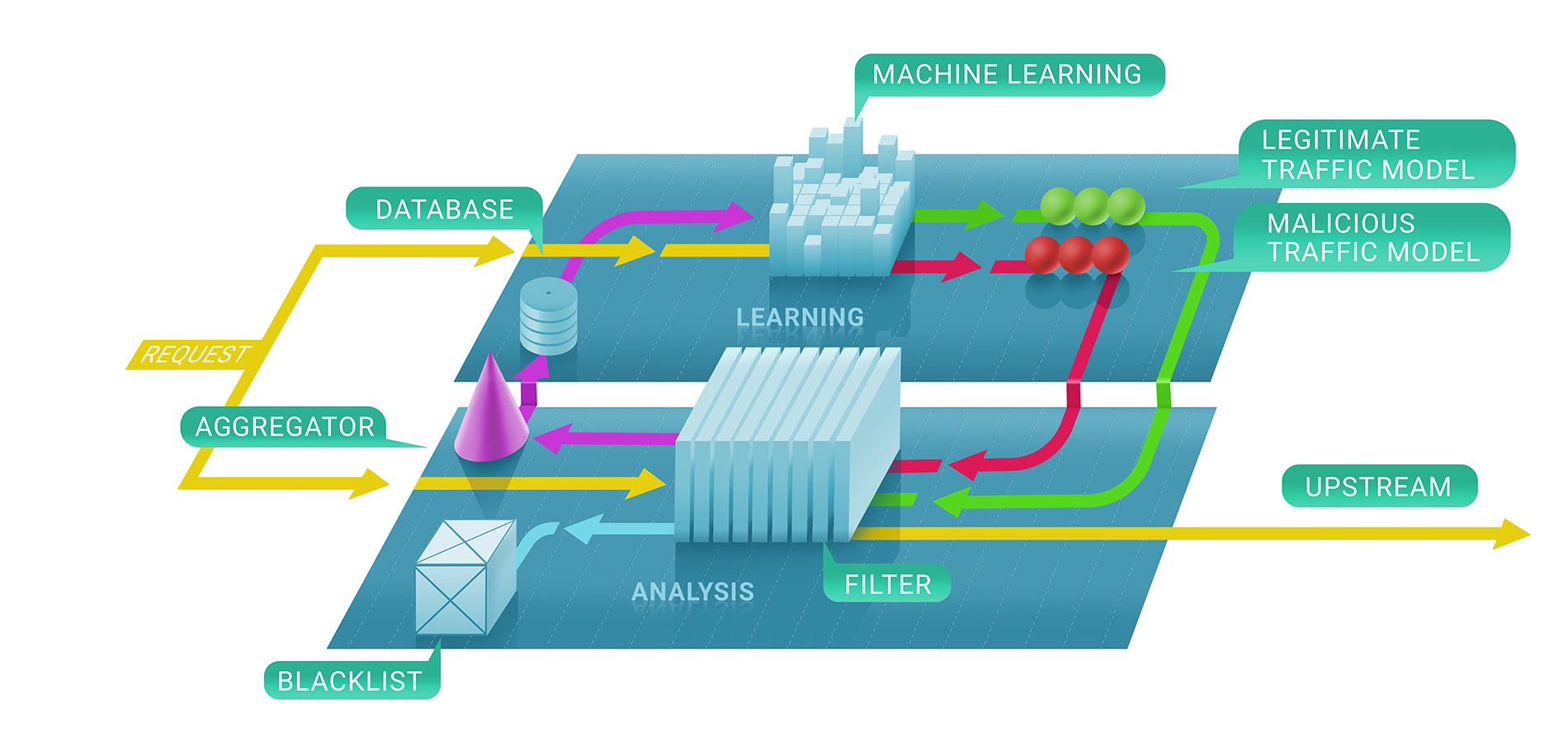

In general, the Qrator Labs filtering service involves two stages: first, we immediately evaluate whether a request is malicious using stateless and stateful checks; second, we decide whether to keep the source blacklisted and for how long. The resulting blacklist can be represented as a list of unique IP addresses.
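The two stages described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Qrator Labs' actual implementation: the `Blacklist` class, the `looks_malicious` check, the request-count threshold, and the ban duration are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Blacklist:
    """Hypothetical blacklist: maps a source IP address to its ban expiry time."""
    expires_at: Dict[str, float] = field(default_factory=dict)

    def ban(self, ip: str, now: float, duration: float) -> None:
        # Stage two: record the source and how long to keep it banned.
        self.expires_at[ip] = now + duration

    def is_banned(self, ip: str, now: float) -> bool:
        expiry = self.expires_at.get(ip)
        if expiry is None:
            return False
        if now >= expiry:
            # The ban has run out, so drop the entry.
            del self.expires_at[ip]
            return False
        return True


def looks_malicious(request_count: int, limit: int = 100) -> bool:
    # Stage one placeholder: a stateful check on a per-source request counter.
    # The threshold is illustrative only.
    return request_count > limit


def handle_request(ip: str, request_count: int, now: float,
                   blacklist: Blacklist, ban_seconds: float = 600.0) -> bool:
    """Return True if the request is allowed through."""
    if blacklist.is_banned(ip, now):
        return False
    if looks_malicious(request_count):
        blacklist.ban(ip, now, ban_seconds)
        return False
    return True


bl = Blacklist()
first = handle_request("203.0.113.7", request_count=5, now=0.0, blacklist=bl)
second = handle_request("203.0.113.7", request_count=500, now=1.0, blacklist=bl)
third = handle_request("203.0.113.7", request_count=5, now=2.0, blacklist=bl)
fourth = handle_request("203.0.113.7", request_count=5, now=700.0, blacklist=bl)
```

In this sketch, the source is allowed at first, banned once its request count crosses the threshold, rejected while the ban is active, and allowed again after the ban expires. Keying the blacklist by IP address mirrors the "list of unique IP addresses" mentioned above.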

In the first stage of this process, we enlist machine learning techniques to better understand the natural flow of traffic for a given resource, since we parametrize the service for each customer individually based on the data we collect.

That is where ClickHouse comes in. To understand precisely why an IP address was banned from communicating with a resource, we have to trace the machine learning model's decision back to the data stored in ClickHouse. It works very fast with large amounts of data (consider a 500 Gbps DDoS attack that runs for a few hours at a time between pauses), and stores it in a way that's naturally compatible with the machine learning frameworks we use at Qrator Labs. More logs and more attack traffic lead to better, more robust results from our models, which we use to further refine the service in real time, even under the most dangerous attacks.

We use ClickHouse to store all attack (illegitimate) traffic logs and bot behavior patterns. We chose this solution because it promised an impressive capacity for processing massive datasets quickly, in a database style. We use this data for analysis, to build the patterns we use in DDoS filtering, and to apply machine learning that improves the filtering algorithms.

ClickHouse is a column-oriented DB focused on delivering maximum processing speed for analytical workloads. It gains its advantage over other systems by using modern algorithms with thorough low-level optimization for the hardware. Combined with data analysis capabilities that go far beyond those of traditional databases, this makes ClickHouse a unique solution that fits our tasks perfectly. And it works, fast!
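To make "column-oriented" concrete, here is a toy sketch of the general idea in plain Python. It is only an illustration of the layout difference, not ClickHouse's actual storage format, and the field names and values are made up for the example.

```python
# Row-oriented layout: every record keeps all of its fields together.
rows = [
    {"ip": "198.51.100.1", "bytes": 1200, "status": 200},
    {"ip": "198.51.100.2", "bytes": 800, "status": 403},
    {"ip": "198.51.100.1", "bytes": 400, "status": 200},
]

# Column-oriented layout: each field is stored as its own sequence.
columns = {
    "ip": [r["ip"] for r in rows],
    "bytes": [r["bytes"] for r in rows],
    "status": [r["status"] for r in rows],
}

# An analytical query such as "total bytes" touches a single column...
total_bytes = sum(columns["bytes"])

# ...whereas the row layout has to visit every full record to get the same answer.
total_bytes_from_rows = sum(r["bytes"] for r in rows)
```

Analytical queries over traffic logs typically aggregate a few fields across a very large number of records, which is the access pattern a columnar layout favors.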