As many readers of the Qrator Labs blog know, DDoS attacks can target different network layers. In particular, a substantial botnet allows an intruder to carry out attacks at L7 (the application layer) and mimic regular users. Without such a botnet, the attacker is limited to packet attacks (any of those that allow source address forgery at some stage of execution) against the underlying transit network layers.

Naturally, in both scenarios the attacker tends to use an existing toolkit, just as a website developer does not write everything from scratch but relies on familiar frameworks like Joomla or Bootstrap (or something else, depending on one’s skills). For example, for the past year and a half the best-known framework for executing attacks from the Internet of Things has been Mirai, open-sourced by its authors in October 2016 in an attempt to shake the FBI off their tail.

For packet attacks, a similarly popular framework is pktgen, a module built into the Linux kernel. It was, of course, created for another, entirely legitimate purpose: network testing and administration. But as Isaac Asimov wrote, “an atom-blaster is a good weapon, but it can point both ways.”

The peculiarity of pktgen is that “out of the box” it can generate only UDP traffic. That is quite sufficient for network testing, and besides, the Linux kernel contributors have no wish to make random shooting in both directions any easier. This somewhat simplified DDoS mitigation: some attackers set the correct ports in pktgen but paid no attention to the resulting IP protocol value. As a result, a significant percentage of packet attacks targeted TCP services (like HTTPS on port 443/TCP) with UDP datagrams. Such illegitimate, parasitic traffic was easy and cheap to discard at distant points (with BGP Flowspec), so it never reached the last mile to the resource under attack.
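The discard decision described above boils down to matching a packet's IP protocol and destination port against what the protected resource actually serves. Here is a minimal sketch of that predicate in Python. `PacketHeader`, `SERVED` and `should_discard` are hypothetical names made up for illustration, and a real deployment would express the rule in router configuration rather than in application code.

```python
from dataclasses import dataclass

# IANA-assigned IP protocol numbers.
IPPROTO_TCP = 6
IPPROTO_UDP = 17


@dataclass(frozen=True)
class PacketHeader:
    """Only the two header fields the filter looks at (hypothetical type)."""
    protocol: int
    dst_port: int


# Assumed service inventory: a classic web server that speaks TCP only.
SERVED = frozenset({(IPPROTO_TCP, 80), (IPPROTO_TCP, 443)})


def should_discard(pkt: PacketHeader, served: frozenset = SERVED) -> bool:
    """Return True when nothing listens on this protocol/port pair."""
    return (pkt.protocol, pkt.dst_port) not in served


# A pktgen-style UDP datagram aimed at the HTTPS port is dropped...
udp_to_https = PacketHeader(protocol=IPPROTO_UDP, dst_port=443)
drop_without_quic = should_discard(udp_to_https)

# ...until the service inventory gains a UDP listener on port 443.
drop_with_quic = should_discard(udp_to_https, SERVED | {(IPPROTO_UDP, 443)})
```

With the TCP-only inventory `drop_without_quic` is `True`. Once UDP port 443 is listed as a served pair, the same datagram passes the filter.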

But things change when Google comes along.

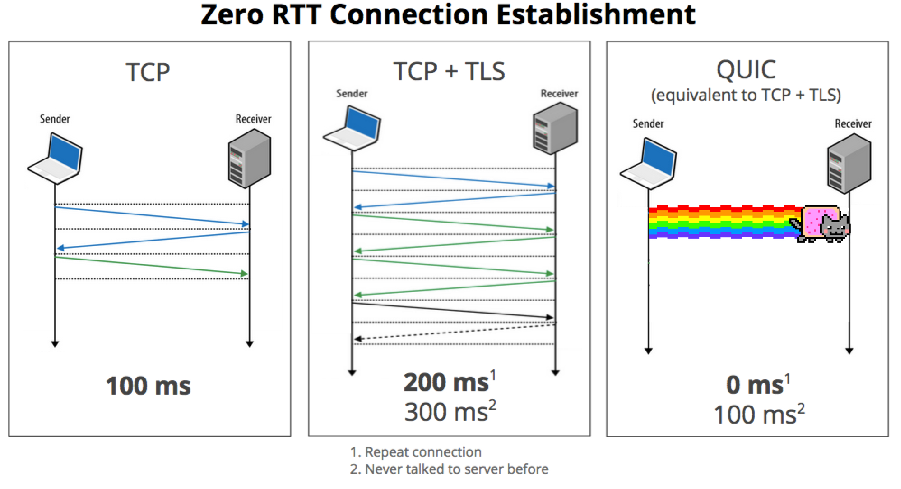

Let’s consider the QUIC protocol, in development since 2013 as a TCP replacement. QUIC multiplexes several data streams between two computers, works on top of UDP, and includes encryption capabilities equivalent to those of TLS and SSL. It also has lower connection and transmission latency than TCP. It tolerates packet loss well by aligning cryptographic block boundaries with packet boundaries. The protocol also includes optional packet-level forward error correction (FEC), which in practice is disabled (much of this comes from Wikipedia).

Over the past few years, Google has developed a number of protocols designed to replace the World Wide Web’s old protocol stack. QUIC works on top of the well-known UDP transport protocol, and at the moment we see isolated cases of QUIC being integrated into live, heavily loaded projects by individual “enthusiasts.” Google Chrome and the Google application servers already support QUIC, which explains the enthusiasm. According to some telecom operators, the protocol, released in 2013, already accounts for a larger share of their traffic than IPv6, introduced in the 1990s.

At the moment, QUIC implementations are mostly userspace programs, so from the kernel’s point of view (not to mention all the transit devices and Flowspec) their traffic is (drum roll) UDP to port 443.

This integration highlights the flaw in an approach where packet attacks are filtered on one set of equipment while higher-level attacks are scrubbed elsewhere (usually on weaker equipment). Building such a solution may be cheap and reassuring, but it is worth noting that such decisions cement the historically developed imbalance in the World Wide Web. And the notion of a “historically developed imbalance” in an industry that is 25 years old just sounds silly.

Let’s imagine that the situation changes: business people come to developers and demand the newfangled QUIC protocol because Google now ranks QUIC-enabled websites higher. That has not happened yet, but it may by 2020. As a result of such an introduction, as in the old joke about the Kremlin plumber, everything would need to be changed.

In prison, two people are talking:

- Are you on political or criminal grounds?

- On political. I am a plumber. They summoned me to the Kremlin. I looked around and said: “The whole system here needs replacement.”

On the other hand, the QUIC protocol is reasonably designed. In particular, unlike many other UDP-based protocols such as DNS, it does not allow amplification attacks by design: QUIC does not send responses that are disproportionately larger than the request to an unconfirmed source, and a handshake is part of the specification.

The primary goal of the handshake is to verify, as efficiently and simply as possible, that requests really come from the IP address written in the “source” field of the IP packet. This lets the server and the network process subsequent packets without wasting resources on fake clients. It also prevents the server from being used as an intermediate unit in an amplification attack, in which the server sends significantly more data to the victim’s address (the forged “source” field) than the attacker had to generate on the victim’s behalf.

Adam Langley and Co. have created a brilliant example for everyone trying to create UDP-based protocols (hello, game developers!). The current QUIC protocol draft dedicates a whole section to the handshake. In particular, the first packet of a QUIC connection cannot be smaller than 1200 bytes, since that is exactly the size of a server handshake response.
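One way to picture how a minimum first-packet size and the anti-amplification goal fit together is a per-address sending budget. The sketch below is an illustration under stated assumptions, not the draft's actual algorithm. The `UnvalidatedPeer` class and its methods are hypothetical, and counting in bytes with a one-to-one ratio is a simplification chosen for this example. The specification's exact accounting may differ.

```python
MIN_FIRST_PACKET = 1200  # bytes, the minimum first-packet size quoted above


class UnvalidatedPeer:
    """Tracks how much a server may send to a not-yet-validated address."""

    def __init__(self) -> None:
        self.bytes_received = 0
        self.bytes_sent = 0
        self.validated = False  # set to True once the handshake completes

    def on_receive(self, size: int) -> bool:
        """Account for an incoming packet; reject an undersized first packet."""
        if self.bytes_received == 0 and size < MIN_FIRST_PACKET:
            return False
        self.bytes_received += size
        return True

    def may_send(self, size: int) -> bool:
        """Before validation, never send more bytes than were received."""
        if self.validated:
            return True
        return self.bytes_sent + size <= self.bytes_received

    def on_send(self, size: int) -> bool:
        """Spend budget for an outgoing packet; refuse if it would amplify."""
        if not self.may_send(size):
            return False
        self.bytes_sent += size
        return True
```

Under these assumptions, a spoofed 100-byte probe gets nothing back, and a full 1200-byte first packet earns at most 1200 bytes of response. The attacker therefore has to spend at least as much bandwidth as the victim receives.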

Until the connection is established (that is, until the authenticity of the client’s IP address is confirmed), a QUIC client cannot cause the server to transmit more packets than the client itself has sent. Naturally, the handshake mechanism offers a convenient target for attackers: the infamous SYN flood attack aims explicitly at the TCP three-way handshake. It is therefore vital for the handshake design to be optimal in both algorithmic complexity and proximity to the hardware.

That was not the case with TCP, where an adequate handshake defense (SYN cookies) was introduced much later by the famous Daniel Bernstein and is, in fact, based on hacks that even obsolete clients support. It is funny, but as you can see from this publication, the QUIC protocol has become a target before its final release. Therefore, when implementing QUIC in production, it is necessary to ensure that its handshake is implemented as efficiently as possible and as close to the processing hardware as possible.

Otherwise, the attacks that the kernel used to handle (Linux has supported SYN cookies for quite some time) would have to be processed by Nginx or, worse, IIS, which are unlikely to cope with them appropriately.
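The idea that makes SYN cookies cheap is statelessness: the server stores nothing about a client until the client echoes back a value that only the server could have produced for that address. The Python sketch below shows the general idea with an HMAC. It reproduces neither the actual TCP SYN cookie encoding nor any QUIC token format, and `SECRET`, `COOKIE_LIFETIME`, `make_cookie` and `check_cookie` are hypothetical names and values chosen for illustration.

```python
import hashlib
import hmac
import os
from typing import Optional
import time

SECRET = os.urandom(32)  # assumed per-server secret; would be rotated in practice
COOKIE_LIFETIME = 60     # seconds; an arbitrary value for this sketch


def _mac(client_ip: str, client_port: int, ts: int) -> bytes:
    """Bind the cookie to the client's address and its issue time."""
    msg = f"{client_ip}|{client_port}|{ts}".encode()
    return hmac.new(SECRET, msg, hashlib.sha256).digest()


def make_cookie(client_ip: str, client_port: int,
                now: Optional[int] = None) -> bytes:
    """Issue a cookie without keeping any per-client state on the server."""
    ts = int(time.time()) if now is None else now
    return ts.to_bytes(8, "big") + _mac(client_ip, client_port, ts)


def check_cookie(cookie: bytes, client_ip: str, client_port: int,
                 now: Optional[int] = None) -> bool:
    """Accept only a fresh cookie that was issued for this exact address."""
    if len(cookie) != 8 + hashlib.sha256().digest_size:
        return False
    ts = int.from_bytes(cookie[:8], "big")
    current = int(time.time()) if now is None else now
    if not 0 <= current - ts <= COOKIE_LIFETIME:
        return False
    expected = _mac(client_ip, client_port, ts)
    return hmac.compare_digest(cookie[8:], expected)
```

A client that forged its source address never sees the cookie and so cannot return it. Verifying one costs a single hash computation, which is the kind of work that can sit close to the hardware instead of in a full web server.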

Every year the network becomes more complicated. These challenges must be met by everyone, and especially by the engineers involved in developing and implementing new protocols. In that process, it is crucial to take security risks into account and resolve them in advance, on paper, without waiting for the threat to materialize. It is worth noting that it remains essential to support any protocol, including QUIC, at a level close to the hardware.

Protecting a system built on the QUIC protocol will be quite a task until all such issues are detected, analyzed, and solved. The same applies to other newfangled protocols like HTTP/2, SPDY, and even IPv6. In the future, we will undoubtedly see more inspiring examples of new-generation protocols.

We will certainly keep you informed.