On the night between July 29 and 30, depending on where in the world you were, a routing anomaly occurred. Following a question on the NANOG mailing list about what exactly was happening, the Qrator.Radar team loaded its research instruments and dove into the investigation. Before we start, however, let us take a general overview of the main actors in this play.

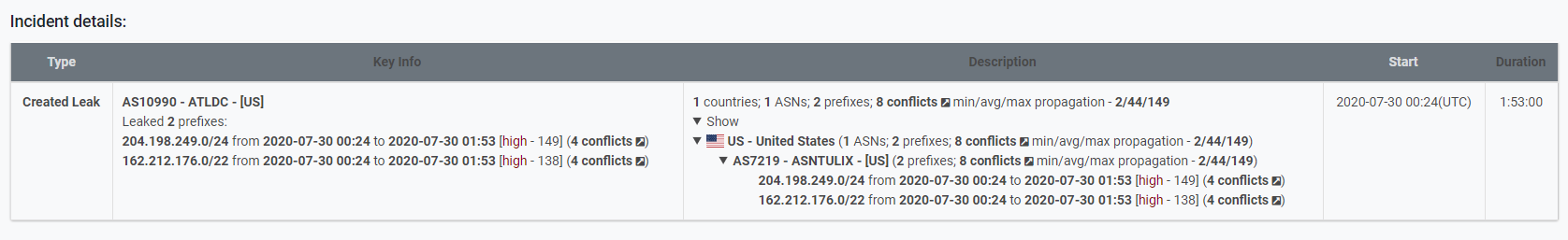

Everything started with an event that at first seemed unrelated: at 00.24 UTC, Tulix leaked two prefixes from its own upstream ASN, AS7219, to AS10990. According to our data, the leak was patched an hour and a half later, at 01.53 UTC, when the announcement was withdrawn. It is crucial to understand how we perceive the relationship between AS7219 and AS10990: AS10990 is much "smaller", meaning it has weaker connectivity, yet everything started exactly with AS10990.

Now let's take a look at the company in question: Tulix Systems Inc., the owner of both ASNs. According to their Crunchbase profile, the company: "provides peerless CDN services to the major Tier 1 backbone providers as well as to over one hundred national and regional providers. Combined with high-powered streaming and routing optimization, Tulix Systems CDN services are optimum for live streaming and which this quality carries over to all levels including on-demand services."

If you read our incident reports often enough, you probably know that the phrase "routing optimization" always sets off our alarms: we have already seen several severe incidents that were most likely caused by "routing optimization" software or techniques rather than by simple and far more common configuration mistakes.

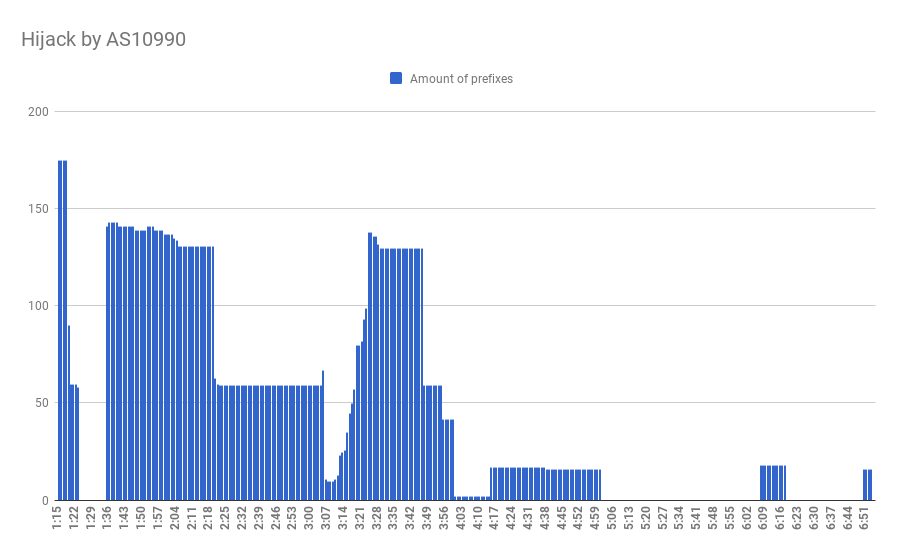

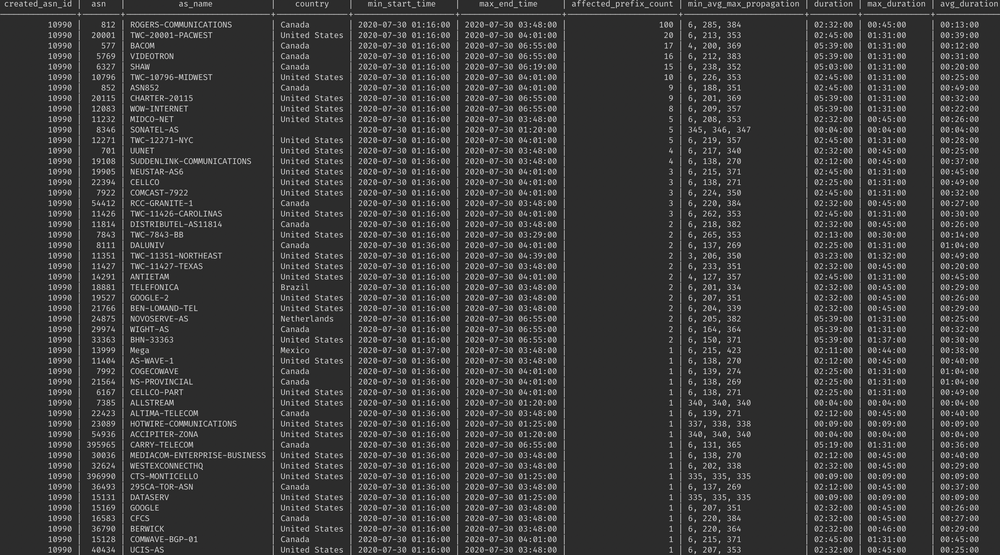

What happened next was both suspicious and dangerous: at approximately 01.16 UTC, AS10990, one of the two customers (downstreams) of AS7219 (both ASes belong to Tulix Systems Inc.), announced 201 prefixes belonging mostly to Canadian and US-based autonomous systems, some of them rather high-profile, including Google, Neustar and Comcast, as well as Canadian Carry Telecom, Brazilian Telefonica and a number of smaller companies, thus creating a massive hijack.

In total, six countries, 51 ASes and 285 prefixes were affected. The hijack persisted from 01.15 to 03.42 UTC, propagating to the routing tables of more than 435 ASes at its peak. The incident as a whole lasted more than five hours.

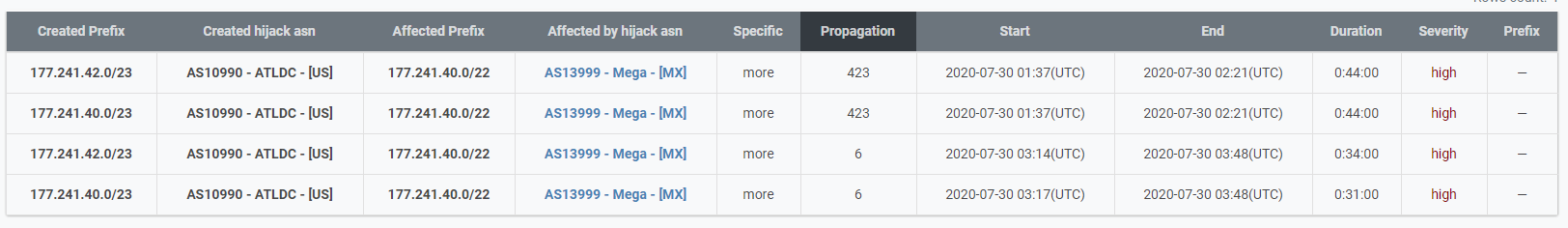

How can we detect routing optimization software within a network? As we have written multiple times before, the most visible characteristic of such software is the extensive use of prefixes that are more specific than those previously announced. In this particular case, we saw exactly such more-specific manipulations.
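To make "more specific than previously announced" concrete, here is a minimal sketch of such a check in Python. It is not Qrator.Radar's detection logic; it assumes a hypothetical baseline of previously seen prefixes with their origin ASes and simply flags every new announcement that falls strictly inside a baseline prefix, marking whether the origin changed. All prefixes (RFC 5737) and ASNs (RFC 5398) below are documentation placeholders.

```python
import ipaddress
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Finding(NamedTuple):
    prefix: str           # newly seen, more specific prefix
    origin: int           # origin AS of the new announcement
    covering: str         # previously announced, less specific prefix
    covering_origin: int  # origin AS of the covering prefix
    origin_changed: bool  # True when the origins differ (hijack-like)


def find_more_specifics(
    announcements: Iterable[Tuple[str, int]],
    baseline: Dict[str, int],
) -> List[Finding]:
    """Flag every announcement that is strictly more specific than a baseline prefix."""
    base = [(ipaddress.ip_network(p), asn) for p, asn in baseline.items()]
    findings = []
    for prefix_str, origin in announcements:
        prefix = ipaddress.ip_network(prefix_str)
        for covering, covering_origin in base:
            # Compare only same-version networks; the prefixlen check excludes
            # the covering prefix itself (subnet_of() is True for equal networks).
            if (prefix.version == covering.version
                    and prefix.prefixlen > covering.prefixlen
                    and prefix.subnet_of(covering)):
                findings.append(Finding(str(prefix), origin, str(covering),
                                        covering_origin, origin != covering_origin))
    return findings


# Hypothetical data, for illustration only.
BASELINE = {"192.0.2.0/24": 64500, "203.0.113.0/24": 64502}
OBSERVED = [
    ("192.0.2.0/25", 64510),     # more specific, different origin
    ("192.0.2.128/25", 64510),   # more specific, different origin
    ("203.0.113.0/25", 64502),   # more specific, same origin (deaggregation)
    ("198.51.100.0/24", 64503),  # not covered by the baseline at all
]

for finding in find_more_specifics(OBSERVED, BASELINE):
    print(finding)
```

A real monitor would work on a full BGP feed with a prefix trie instead of this quadratic loop, but the decision being made is the same: a new, longer prefix inside an existing one, with the same or a different origin.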

It is also interesting how Telia (AS1299) contributed to the propagation of the incident: the appearance of the anomalous prefixes almost coincides with the state of the BGP session between Telia and Tulix, which was dropped and re-established three times during the incident.

| # | First seen (UTC) | Last seen (UTC) |
|---|---|---|
| 1 | 2020-07-30 06:51:00 | 2020-07-30 06:55:00 |
| 2 | 2020-07-30 06:08:00 | 2020-07-30 06:19:00 |
| 3 | 2020-07-30 01:36:00 | 2020-07-30 05:03:00 |
| 4 | 2020-07-30 01:16:00 | 2020-07-30 01:25:00 |
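The intervals in the table can be turned into durations and gaps with a few lines of Python. This is only arithmetic on the four rows above, sorted chronologically; it assumes the timestamps are UTC and that each row is one period during which the session (and the anomalous routes through it) was visible.

```python
from datetime import datetime

# The four rows of the table, in chronological order (assumed UTC).
RAW = [
    ("2020-07-30 01:16:00", "2020-07-30 01:25:00"),
    ("2020-07-30 01:36:00", "2020-07-30 05:03:00"),
    ("2020-07-30 06:08:00", "2020-07-30 06:19:00"),
    ("2020-07-30 06:51:00", "2020-07-30 06:55:00"),
]
FMT = "%Y-%m-%d %H:%M:%S"


def minutes(delta) -> int:
    """Whole minutes in a timedelta."""
    return int(delta.total_seconds() // 60)


intervals = sorted((datetime.strptime(a, FMT), datetime.strptime(b, FMT)) for a, b in RAW)
# Length of each visibility period.
up = [minutes(end - start) for start, end in intervals]
# Time between the end of one period and the start of the next one.
gaps = [minutes(nxt[0] - cur[1]) for cur, nxt in zip(intervals, intervals[1:])]

print("visible (min):", up)
print("gaps (min):", gaps)
print("re-establishments:", len(gaps))
```

For these rows the visible periods are 9, 207, 11 and 4 minutes, separated by gaps of 11, 65 and 32 minutes: three drops, each followed by a re-establishment.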

We suppose that Telia had no filtering in place at its border with AS7219 and accepted all the problematic announcements until, at some point, enough was enough and Telia left the list of AS7219's upstream providers. If Telia had an AS_SET-based whitelist filter in place, there would have been no reason to turn it off, and in that case we would hardly be seeing the waves observed here.
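For readers unfamiliar with such filters, here is a minimal sketch of the idea in Python: the customer's registered as-set is expanded recursively into member ASNs, the route objects of those ASNs form the whitelist, and anything else is rejected. The as-set names, ASNs and prefixes are hypothetical documentation values hard-coded for illustration; in practice operators generate these lists from IRR databases with dedicated tooling, and many allow registered more specifics up to some length rather than using the strict exact-match policy shown here.

```python
import ipaddress
from typing import Dict, List, Optional, Set

# HYPOTHETICAL IRR data (RFC 5398 ASNs, RFC 5737 prefixes), not real registrations.
AS_SETS: Dict[str, List[str]] = {
    "AS-EXAMPLE-CUSTOMER": ["AS64500", "AS-EXAMPLE-DOWNSTREAM"],
    "AS-EXAMPLE-DOWNSTREAM": ["AS64501"],
}
ROUTE_OBJECTS: Dict[str, List[str]] = {
    "AS64500": ["192.0.2.0/24"],
    "AS64501": ["198.51.100.0/24"],
}


def expand_as_set(name: str, seen: Optional[Set[str]] = None) -> Set[str]:
    """Recursively expand an as-set into member ASNs, skipping loops."""
    seen = set() if seen is None else seen
    if name in seen:
        return set()
    seen.add(name)
    asns: Set[str] = set()
    for member in AS_SETS.get(name, []):
        if member.startswith("AS-"):  # nested as-set
            asns |= expand_as_set(member, seen)
        else:                          # plain ASN
            asns.add(member)
    return asns


def build_whitelist(as_set: str) -> set:
    """Collect every registered route of every ASN in the customer's cone."""
    return {
        ipaddress.ip_network(prefix)
        for asn in expand_as_set(as_set)
        for prefix in ROUTE_OBJECTS.get(asn, [])
    }


def accept(prefix: str, whitelist: set) -> bool:
    """Exact-match policy: unregistered prefixes, including more specifics, are dropped."""
    return ipaddress.ip_network(prefix) in whitelist


WHITELIST = build_whitelist("AS-EXAMPLE-CUSTOMER")
for p in ["192.0.2.0/24", "198.51.100.0/24", "192.0.2.0/25", "203.0.113.0/24"]:
    print(p, "accept" if accept(p, WHITELIST) else "reject")
```

With a filter like this at the border, a customer suddenly announcing other networks' prefixes is rejected on every session flap, so the repeated waves would not appear.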

Conclusion? Do not try to optimize routes with automated software. BGP is a path-vector routing protocol that has proved, over the years, its ability to handle the traffic. Software that attempts to "optimize" a system with thousands of participants will never be smart enough to foresee all the possible outcomes of such manipulation. At least this time, no one was hurt. However, we have seen too many times how playing the "more specific" game leads to denial of service for companies, industries and, in some cases, entire nations, knocking out the biggest and most crucial computer systems, which are nowadays profoundly involved in human life.
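The reason the "more specific" game is so dangerous is longest-prefix matching: when forwarding, a router prefers the most specific route covering the destination, regardless of who announced it. The sketch below is a simplified lookup over a hypothetical two-entry table (documentation prefixes and ASNs), not a real router FIB.

```python
import ipaddress
from typing import Dict, Optional

# HYPOTHETICAL routing table: prefix -> where traffic for it is sent.
ROUTES: Dict[str, str] = {
    "192.0.2.0/24": "legitimate origin AS64500",
    "192.0.2.0/25": "hijacker AS64510",
}


def lookup(dst: str, routes: Dict[str, str]) -> Optional[str]:
    """Return the next hop of the longest (most specific) prefix containing dst."""
    addr = ipaddress.ip_address(dst)
    matches = [ipaddress.ip_network(p) for p in routes if addr in ipaddress.ip_network(p)]
    if not matches:
        return None
    best = max(matches, key=lambda net: net.prefixlen)
    return routes[str(best)]


print(lookup("192.0.2.10", ROUTES))   # inside the /25 -> hijacker AS64510
print(lookup("192.0.2.200", ROUTES))  # only the /24 covers it -> legitimate origin AS64500
```

Half of the legitimate /24 is silently redirected simply because someone announced a longer prefix inside it.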

If the nature of your business requires you to receive updates on such incidents in real time, please email us at radar@qrator.net.